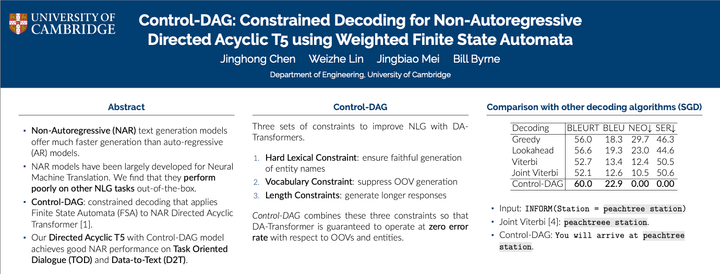

Control-DAG: Constraining Non-Autoregressive Text Generation with Weighted Finite State Automata (WFSA)

[4-minute read]

TL;DR. Non-autoregressive (NAR) models generate text much faster than autoregressive (AR) models. However, we find that previous NAR approaches, largely developed for Machine Translation, fail badly when applied to Task-Oriented Dialogue and Data-to-Text generation. Our NAACL 2024 paper introduces Control-DAG, a constrained decoding algorithm that uses Weighted Finite State