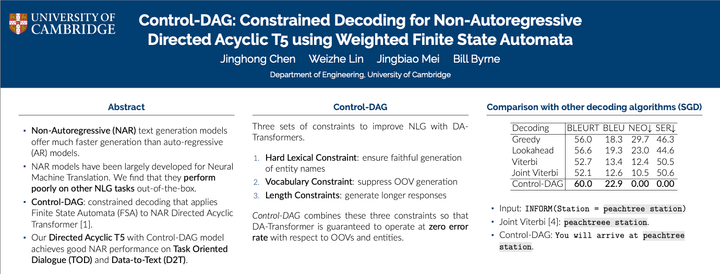

3-minute Pitch: Late-Interaction Knowledge Retriever (FLMR) for Visual Question Answering @NeurIPS 2023

Knowledge-based Visual Question Answering (KBVQA) aims to answer a question related to an image that requires some world knowledge. Here's an example.

Our NeurIPS paper takes the retrieval-augmented approach to tackle KBVQA. We first retrieve relevant documents from an external database and then generate answers based on the retrieved documents. We introduce the first late-interaction knowledge retriever that handles queries combining images and text. Our model, the Fine-grained Late-interaction Multimodal Retriever (FLMR), outperforms Dense Passage Retriever (DPR) in knowledge retrieval by large margins on major Visual Question Answering (VQA) datasets. FLMR represents each query and document as a matrix of token embeddings, as opposed to DPR's single embedding. The relevance score is computed via "late interaction", which efficiently aggregates all token-level relevance scores. This allows FLMR to capture finer-grained relevance between query and document for better retrieval.

FLMR Retrieval

Given a query \(\bar q\) consisting of an image and a related question, FLMR retrieves a document \(d\) useful for answering \(\bar q\) from a knowledge base. We use two types of vision features:

- Text-based vision: image caption from Oscar and object-detection outputs from VinVL

- Feature-based vision: embeddings of the image and 9 Regions of Interest (RoIs) from CLIP's vision encoder. A single-layer MLP projects the feature-based vision into the text retriever's embedding space.
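
As a rough illustration of the projection step, the sketch below maps 10 vision vectors (1 image plus 9 RoIs) into the retriever's embedding space with a single linear layer and appends them to the text token embeddings. The dimensions, the random weights, the one-output-token-per-vision-vector mapping, and the concatenation order are all assumptions made for this example, not details taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes, chosen only for illustration.
vision_dim = 768        # size of each CLIP vision embedding (assumed)
text_dim = 128          # size of each retriever token embedding (assumed)
num_text_tokens = 32    # number of text tokens in the query (assumed)

# 1 whole-image embedding + 9 RoI embeddings (random stand-ins here).
vision_feats = rng.normal(size=(10, vision_dim))

# Single linear layer standing in for the "single-layer MLP".
W = rng.normal(scale=0.02, size=(vision_dim, text_dim))
b = np.zeros(text_dim)
vision_tokens = vision_feats @ W + b          # shape (10, text_dim)

# Text token embeddings from the text retriever (random stand-ins here).
text_tokens = rng.normal(size=(num_text_tokens, text_dim))

# Assumed: the query matrix stacks text tokens and projected vision tokens.
Q = np.concatenate([text_tokens, vision_tokens], axis=0)
print(Q.shape)
```

Once the vision features live in the same space as the text tokens, they can take part in token-level scoring like any other query token.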

We use ColBERT as the late-interaction text retriever, which computes the relevance score between \(\bar q\) and \(d\) as follows:

\[r(\bar q, d)=\sum_{i} \max_j Q_{i}D_{j}^T\]

For each query token \(Q_i\), we compute the dot product between the query token and all document tokens, selecting the highest score, \(\max_j Q_i D_j^T\). The final relevance score is the sum of these token-level max scores over all query tokens. This is late interaction, allowing full interaction between query and document tokens. The PLAID engine efficiently performs late interaction. FLMR is only 23% slower than DPR.
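
The scoring rule above can be sketched in a few lines of NumPy. This is a minimal brute-force version for a single query and document, with toy 2-dimensional embeddings made up for the example; it does not reproduce the PLAID engine's indexing or pruning.

```python
import numpy as np

def late_interaction_score(Q: np.ndarray, D: np.ndarray) -> float:
    """Sum over query tokens of the max dot product with any document token.

    Q has shape (num_query_tokens, dim); D has shape (num_doc_tokens, dim).
    """
    sim = Q @ D.T                    # token-level similarities, (len_q, len_d)
    per_token_max = sim.max(axis=1)  # best-matching document token per query token
    return float(per_token_max.sum())

# Toy embeddings (illustrative values only).
Q = np.array([[1.0, 0.0],
              [0.0, 1.0]])
D = np.array([[0.5, 0.5],
              [1.0, 0.0],
              [0.0, 2.0]])

score = late_interaction_score(Q, D)
print(score)  # 1.0 (first query token) + 2.0 (second query token) = 3.0
```

Each query token picks its own best-matching document token, so a document can score highly by matching different query tokens in different places, which a single-vector dot product cannot express.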

Retrieval-Augmented Visual Question Answering

For each query, the top-5 documents are retrieved. We then generate one answer per retrieved document using a fine-tuned BLIP-2. Following RAVQA-v2, the candidate with the highest joint retrieval and generation probability is selected.
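
A minimal sketch of the selection step follows. It assumes that the retrieval probability is a softmax over the retrieved documents' relevance scores and that the generator returns a log-probability for each answer; both are assumptions for illustration, and the function name `select_answer` is hypothetical.

```python
import math

def select_answer(retrieval_scores, candidates):
    """Pick the answer with the highest joint retrieval-and-generation probability.

    retrieval_scores: one relevance score per retrieved document.
    candidates: one (answer, generation_log_prob) pair per retrieved document.
    Assumes retrieval probability = softmax over the retrieved documents' scores.
    """
    # Log of the softmax normalizer, computed stably.
    m = max(retrieval_scores)
    log_z = m + math.log(sum(math.exp(s - m) for s in retrieval_scores))

    best_answer, best_joint = None, -math.inf
    for score, (answer, gen_log_prob) in zip(retrieval_scores, candidates):
        joint = (score - log_z) + gen_log_prob  # log p(doc) + log p(answer | doc)
        if joint > best_joint:
            best_answer, best_joint = answer, joint
    return best_answer, best_joint

# Toy example with three retrieved documents (values are made up).
retrieval_scores = [2.0, 1.0, 0.0]
candidates = [("cat", math.log(0.2)),
              ("dog", math.log(0.9)),
              ("bird", math.log(0.5))]

best_answer, best_joint = select_answer(retrieval_scores, candidates)
print(best_answer)
```

In this toy case the answer from the second-ranked document wins, because its high generation probability outweighs its lower retrieval probability.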

Experiments and Results

We focus on the OK-VQA dataset, where a large proportion of questions require outside knowledge. We also evaluate on FVQA and Infoseek. For retrieval, we compare Recall@5 against a Dense Passage Retriever (DPR) baseline. We also report final VQA scores and Exact Match (EM) rates.
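
For readers unfamiliar with the retrieval metric, here is a small sketch of Recall@K. It assumes that a query counts as a hit when at least one of its top-K retrieved documents is judged relevant; how relevance is judged is outside the scope of this sketch, and the data below is made up.

```python
def recall_at_k(ranked_relevance, k=5):
    """Fraction of queries with at least one relevant document in the top k.

    ranked_relevance: for each query, a list of booleans over its retrieved
    documents, ordered from highest to lowest retrieval score.
    """
    hits = sum(1 for relevance in ranked_relevance if any(relevance[:k]))
    return hits / len(ranked_relevance)

# Three toy queries: relevant document at rank 2, at rank 6, and at rank 1.
ranked_relevance = [
    [False, True, False, False, False, False],
    [False, False, False, False, False, True],
    [True, False, False, False, False, False],
]

r5 = recall_at_k(ranked_relevance, k=5)
print(r5)  # two of the three queries have a relevant document in the top 5
```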

Our main findings are:

- Late interaction brings \(>+6\) Recall@5 gains over DPR baselines.

- Feature-based Vision complements text-based vision, yielding +2 Recall@5.

- Recall@5 rises by 2.3 when 9 RoIs are added to FLMR, but decreases by 0.5 when the same RoIs are added to DPR. This shows FLMR can use the finer-grained vision information whereas DPR cannot.

- Our final VQA system with FLMR retrieval performs better than previous baselines of similar parameter scale and is on par with PaLI-17B while being over 10 times smaller.

Conclusion

We introduced FLMR, the first late-interaction knowledge retriever in a multi-modal setting. FLMR outperforms DPR by large margins on OK-VQA, FVQA, and Infoseek. Here are the paper and the code. Leave a comment, shoot an email, or find us at NeurIPS poster #314 on Wed morning (Dec. 13th) if you find this interesting!

The first author Weizhe Lin is the project lead. Jingbiao Mei is a main contributor. I am the joint first author of this work.
